At Arcadia, one of our central tenets is that our science should be maximally useful. Computational tasks are embedded in all aspects of our scientific inquiry, so we need our computing strategies to be maximally useful too. What does this mean? In addition to aligning with scientific priorities across Arcadia, we believe that useful computing is innovative, usable, reproducible, and timely (we define several of these key concepts below).

This is easier said than done. We don’t yet know how to balance all these distinct but interdependent needs. Below we discuss our goals and most pressing challenges along with our current approaches to solve them. Over time, we expect to iterate on our solutions as we learn what works and what doesn’t. While we don’t yet have the answers, we are committed to identifying and sharing solutions that work for our organization. We hope that you’ll weigh in on our pubs to better inform our strategy and help us understand how these efforts might help you too.

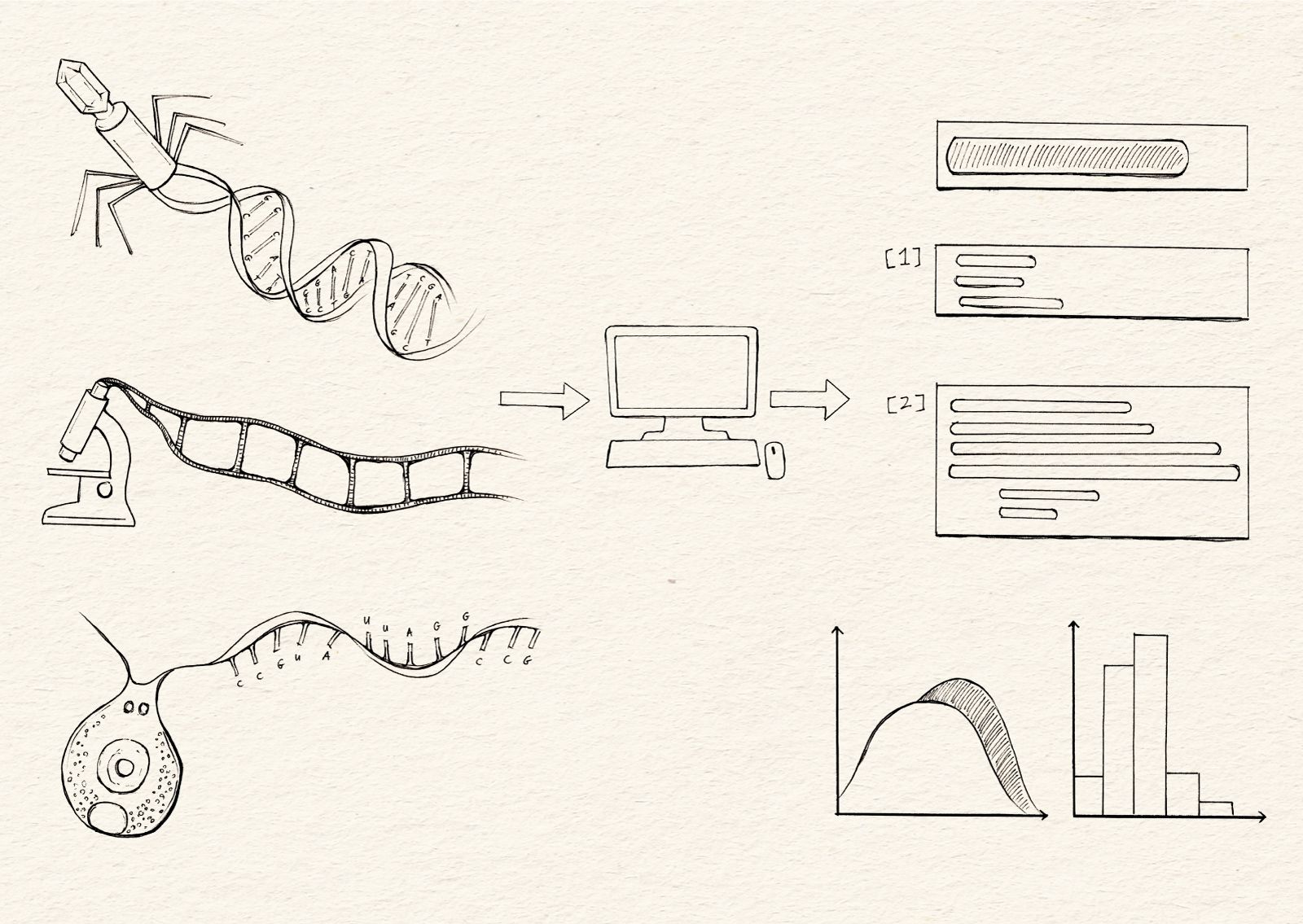

Arcadia’s software team could take on most computational tasks for the rest of our scientists. But we believe that the scientists who directly create and contribute to a project are best positioned to analyze that project’s data because of their domain expertise. Further, computational thinking has the potential to transform biology as a discipline if we’re able to integrate it well.

CHALLENGE: How can we help individual scientists build and maintain agency in computational work?

Not every scientist can be an expert in everything, nor should they be. Our researchers have a wide array of scientific backgrounds, so we’re equipping them with a baseline vocabulary and skills to support a dialogue about computational science across the organization. We are also actively collaborating with scientists on their computational inquiries. The learning curve is steep, so we started an internal training group that offers short workshops and office hours for extra help, as well as a Slack channel dedicated to software questions.

If done well, some of our computational technologies will broaden our imagination and spur new hypotheses. Some scientific computation will happen after projects begin and goals are already set, but we also want it to spark totally new questions and actionable ideas.

CHALLENGE: How do we create space for innovation so that we’re able to swiftly identify and pursue new leads?

Our software team is inherently collaborative, working directly with our other scientists to write and apply code for their projects. Beyond this supporting role, we are experimenting with the best ways to make space for exploratory, curiosity-driven computational innovation. We are starting by creating cultural clarity around this priority and making sure the software team divides its bandwidth effectively between maintenance and creative work. We are also growing Arcadia slowly so that we have the flexibility to hire as needed to test computationally generated hypotheses in the lab.

At minimum, we want other researchers to obtain the same results we did if they run the same code using the same data and methods. We also want others to easily adapt, extend, and use our products to answer similar biological questions.

CHALLENGE: How do we scale reproducibility and usability?

Ensuring these qualities takes significant effort. For each project, we work to define the minimum viable product (MVP): the lowest acceptable threshold for reproducibility. This helps us ensure reusability while still prioritizing our time and staying on track with our big-picture science and financial sustainability goals. To determine how to make a computational product reproducible and easily usable, we consider whether there are pre-existing methods, how much tinkering is required, how often we expect to use the methodology, the size of the user base, and the infrastructure needed to run the code. In essence, we align MVPs to the impact and maturity of the problem. We work hard to make sure we don’t let perfect get in the way of good (and useful!).

We guide our decision-making about potential solutions to our challenges using the following operating principles:

- Explore, experiment, and formalize. When possible, we explore our decision space, conduct small experiments, and formalize our decisions using the data and feedback we collect.
- Maximize utility of computational products. Our decisions will be driven by what makes our products most usable both within and outside of Arcadia. We will iterate on this in a data-driven way.
- Ship focused computational products quickly and iteratively. We encourage decisions that support fast and iterative approaches to scientific computation.
- Remember that no decision is truly irreversible. We recognize that decisions can be changed if our original strategy no longer serves our goals. We keep this in mind to minimize analysis paralysis as we progress.

We’ve come up with a shared vocabulary that has helped us discuss and iterate on solutions for our challenges. We define some of the most important words here.

Computational products: Scripts, notebooks, workflows, software packages, and/or repositories that we encode, along with their accompanying documentation.

Useful: Our computational products are helpful in addressing biological problems.

Usable: The ability for someone inside or outside of Arcadia to use our computational products with relatively little activation effort (for example, by following the documentation) and without a substantial investment of time or money.

Repeatable: The ability for someone to repeat the scientific analysis we have encoded and achieve the exact same results as we produced.

Reproducible: The ability for someone to repeat the scientific analysis we encoded on similar but new data and achieve a similar biological result, or to use an orthogonal analysis on the same data and achieve a similar biological result.

Open: Computational products, data, and explanations that are freely available with clear licenses for reuse, redistribution, and reproduction. To maximize the usefulness of our science, our goal is to make our tools as open as possible while respecting the licenses of the underlying software and our translational goals.

Iterative: A style of computational product development where the product is improved by repeated review, testing, and deployment on real problems.
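The difference between "repeatable" and "reproducible" can be made concrete with a small check. The Python sketch below assumes an analysis that uses randomness: it fixes the random seed, serializes the result, and compares SHA-256 digests of two runs, which tests repeatability in the sense defined here (same code, same data, exact same result). `run_analysis` and its bootstrap are hypothetical stand-ins, not an Arcadia analysis. A hash comparison cannot test reproducibility, which involves new data or an orthogonal method and a result that is only biologically similar.

```python
import hashlib
import json
import random


def run_analysis(values, seed):
    """Hypothetical stand-in for an encoded analysis: a seeded bootstrap of the mean."""
    rng = random.Random(seed)  # private generator, so output depends only on the inputs
    boot_means = []
    for _ in range(100):
        resample = [rng.choice(values) for _ in values]
        boot_means.append(sum(resample) / len(resample))
    return {
        "mean": sum(values) / len(values),
        "bootstrap_low": min(boot_means),
        "bootstrap_high": max(boot_means),
    }


def fingerprint(result):
    """SHA-256 digest of a canonical (key-sorted) JSON serialization of a result."""
    payload = json.dumps(result, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


if __name__ == "__main__":
    data = [2.1, 3.4, 2.9, 4.0, 3.3]
    first = fingerprint(run_analysis(data, seed=42))
    second = fingerprint(run_analysis(data, seed=42))
    # Same code + same data + same seed should give byte-identical output.
    print("repeatable:", first == second)
```

Exact-match checks like this can break across library or platform versions (for example, through small floating-point differences), which is one reason to record software versions alongside an analysis.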

To support usability and reproducibility in our computing projects, we created baseline guidelines to aid in the development of clear and organized code, proper data management, and effective documentation. These guidelines are meant to be simple and approachable, meeting our scientists where they are.

To reduce the burden of following these guidelines, we rely heavily on templates and training. We have implemented an internal training program called the “Arcadia Users Group” (AUG) to roll out these guidelines and help level up computational skills across our organization. We’re working on a perspective piece that will go into more detail about this work.

A key strategy for streamlining repeatable computational tasks for our scientists is to use a workflow orchestration framework. We selected Nextflow to help create reproducible pipelines for our standardized computational tasks. We highlight our decision-making process in a perspective pub:

Creating reproducible workflows for complex computational pipelines

A workflow orchestration framework can streamline repeatable tasks and make workflows broadly usable. From several options, we chose Nextflow due to the ease of deploying across platforms, vibrant nf-core community, and ability to manage and monitor workflows with Nextflow Tower.

In practice, each project has taken a tailored approach to creating computational products that other scientists can use and that can reproduce the results we present in pubs.

For example, a method pub about isolating phage DNA and identifying nucleosides had an accompanying GitHub repository that contained a rendered analysis notebook, pointers to the input data, and the software versions used to run the analysis. The repository showed how to process mass spectrometry data to find nucleosides.

A workflow to isolate phage DNA and identify nucleosides by HPLC and mass spectrometry

This pub details a process for phage amplification and concentration, DNA extraction, and HPLC and MS analysis of phage nucleosides. We optimized the approach with model phages known to use non-canonical nucleosides in their DNA, but plan to apply it for other phages.
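The phage repository listed the software versions used in its analysis. As a minimal sketch of how such a record could be captured from inside a Python analysis (an assumption; the source does not say how those versions were collected), the standard-library `importlib.metadata` module can look up installed package versions. The package names in the example are placeholders.

```python
import json
import platform
import sys
from importlib import metadata


def record_versions(packages):
    """Return the Python version plus installed versions of the named packages.

    Packages that are not installed are recorded as None instead of raising.
    """
    versions = {"python": platform.python_version()}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    # Placeholder dependency list; a real analysis would list its own packages.
    info = record_versions(["pip", "numpy", "pandas"])
    json.dump(info, sys.stdout, indent=2, sort_keys=True)
    print()
```

Writing this dictionary next to a notebook's outputs is a lightweight complement to a pinned environment file, which captures the full dependency set more completely.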

Another research effort, which demonstrated how to compare amoeboid crawling behavior, took a similar approach by documenting the analysis in notebooks, but it also created a linked Binder so that others can interact with and re-run the code. A Binder is a temporary compute environment built from a GitHub repository: researchers can execute or modify the code in it without changing the repository itself.

Distinct spatiotemporal movement properties reveal sub-modalities in crawling cell types

Quantifying movement is a powerful window into cellular functions. However, cells can generate movement through a variety of complex mechanisms. Here, we generate a flexible framework for comparing an especially variable type of motility: cellular crawling.

Last, a computational project explored sequence attributes that correlate with protein annotations using actin as a model protein. We encoded the pipeline as a Snakemake workflow and adapted it so that researchers can upload their own actin protein sequences to see how they score for different annotation attributes.

Defining actin: Combining sequence, structure, and functional analysis to propose useful boundaries

The process of deciding whether a candidate actin homolog represents a “true” actin is tricky. We propose clear and data-driven criteria to define actin that highlight the functional importance of this protein while accounting for phylogenetic diversity.

Many of our computational tasks are routine and need to be performed multiple times by different people across Arcadia — for example, sequencing data quality control, genome assembly, and building phylogenetic trees. We chose to standardize these routine pipelines as Nextflow workflows deployed through AWS Batch. Read our pub to understand how we came to this decision.

Our first Nextflow workflow encodes a pipeline for quickly assessing the quality of new sequencing data. Our goal with this workflow is to let scientists quickly and confidently post new sequencing data to public repositories before formal biological analysis, thereby allowing other researchers to access it as soon as possible. To do this, we need to ensure that our new sequencing data doesn’t contain errors that should preclude its deposition in a public database. The seqqc workflow produces an interpretable report to assess sequence data quality.

Speeding up the quality control of raw sequencing data using seqqc, a Nextflow-based solution

seqqc is a Nextflow pipeline for quality control of short- or long-read sequencing data. It quickly assesses the quality of sequencing data so that it can be posted to a public repository before analysis for biological insights. Faster open data, faster knowledge for everyone.
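The source does not describe which tools seqqc runs internally, so the following is only a toy illustration of one statistic that read-quality reports typically summarize. It assumes FASTQ input with Phred+33 quality encoding, decodes each quality string, and counts reads whose mean Phred score falls below a threshold. The threshold of 20 and all function names are hypothetical, not seqqc settings.

```python
def phred_scores(quality, offset=33):
    """Decode a FASTQ quality string, assuming Phred+33 encoding."""
    return [ord(ch) - offset for ch in quality]


def mean_quality(quality):
    scores = phred_scores(quality)
    return sum(scores) / len(scores)


def parse_fastq(text):
    """Yield (read_id, sequence, quality) from complete 4-line FASTQ records."""
    lines = [ln.strip() for ln in text.strip().splitlines()]
    if len(lines) % 4 != 0:
        raise ValueError("FASTQ text must contain complete 4-line records")
    for i in range(0, len(lines), 4):
        header, seq, _separator, qual = lines[i:i + 4]
        if not header.startswith("@") or len(seq) != len(qual):
            raise ValueError(f"malformed record starting at line {i + 1}")
        yield header[1:], seq, qual


def summarize(text, min_mean_q=20):
    """Count reads and reads whose mean Phred score is below `min_mean_q` (hypothetical cutoff)."""
    n_reads = 0
    n_low = 0
    for _read_id, _seq, qual in parse_fastq(text):
        n_reads += 1
        if mean_quality(qual) < min_mean_q:
            n_low += 1
    return {"reads": n_reads, "low_quality_reads": n_low}


if __name__ == "__main__":
    example = "@r1\nACGT\n+\nIIII\n@r2\nACGT\n+\n####\n"
    print(summarize(example))
```

Averaging Phred scores directly is a simplification because the scale is logarithmic; averaging the underlying error probabilities is more faithful, and dedicated QC tools report far richer per-position and per-read statistics.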

Next, we developed a Nextflow workflow that assembles genomes from PacBio HiFi reads and spits out key quality control stats that tell us whether we need to do additional work before proceeding.

Streamlining genome assembly and QC with the reads2genome workflow

We want to swiftly generate genome assemblies and produce quality control statistics to gauge the need for more curation. We built a Nextflow pipeline that assembles Illumina, Nanopore, or PacBio sequencing reads for a single organism and runs QC checks on the resulting assembly.
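The reads2genome description mentions assembly QC statistics without listing them. N50, a standard contiguity metric, illustrates the kind of number involved: it is the contig length L such that contigs of length L or longer together contain at least half of the assembled bases. The sketch below computes it alongside a few basic counts; it is illustrative and not the workflow's own code.

```python
def n50(contig_lengths):
    """Smallest length L such that contigs >= L cover at least half the assembly."""
    if not contig_lengths:
        raise ValueError("no contigs")
    total = sum(contig_lengths)
    running = 0
    for length in sorted(contig_lengths, reverse=True):
        running += length
        if running * 2 >= total:  # integer comparison avoids rounding issues
            return length


def assembly_stats(contig_lengths):
    """Basic contiguity summary for a list of contig lengths (in bases)."""
    return {
        "contigs": len(contig_lengths),
        "total_length": sum(contig_lengths),
        "largest": max(contig_lengths),
        "n50": n50(contig_lengths),
    }


if __name__ == "__main__":
    print(assembly_stats([100, 80, 50, 30, 20]))
```

For these example lengths (280 bases total), the two largest contigs reach 180 bases, which passes the 140-base halfway point, so N50 is 80.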

We also designed a Nextflow workflow that performs QC, preprocessing, and profiling of metagenomic samples obtained through either Illumina or Nanopore sequencing technologies.

Quickly preprocessing and profiling microbial community sequencing data with a Nextflow workflow for metagenomics

We want to seamlessly process and summarize metagenomics data from Illumina or Nanopore technologies. We built a Nextflow workflow that handles common metagenomics tasks and produces useful outputs and intuitive visualizations.

We designed a workflow that screens genomes with gene models for horizontal gene transfer. It combines multiple existing HGT-screening algorithms to produce a set of candidate gene transfer events. The preHGT workflow can screen eukaryotic, bacterial, or archaeal genomes for horizontal gene transfer events that occur within or between kingdoms.

PreHGT: A scalable workflow that screens for horizontal gene transfer within and between kingdoms

Horizontal gene transfer (HGT) is the exchange of DNA between species. It can lead to the acquisition of new gene functions, so finding HGT events can reveal genome novelty. preHGT is a pipeline that uses multiple existing methods to quickly screen for transferred genes.
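The source says preHGT combines multiple existing HGT-screening methods but does not say how their outputs are merged. One simple option, shown here purely as an assumption, is a support threshold: report a gene as a candidate if at least `min_methods` methods flag it. The method names and gene IDs are hypothetical.

```python
def consensus_candidates(calls_by_method, min_methods=2):
    """Map each gene flagged by >= min_methods methods to the sorted list of supporting methods."""
    support = {}
    for method, genes in calls_by_method.items():
        for gene in set(genes):  # count each method at most once per gene
            support.setdefault(gene, []).append(method)
    return {
        gene: sorted(methods)
        for gene, methods in support.items()
        if len(methods) >= min_methods
    }


if __name__ == "__main__":
    # Hypothetical outputs from three screening methods.
    calls = {
        "method_a": ["g1", "g2", "g3"],
        "method_b": ["g2", "g3"],
        "method_c": ["g3", "g4"],
    }
    print(consensus_candidates(calls))
```

Setting `min_methods=1` returns the union of all calls (most sensitive), while larger values trade sensitivity for agreement between methods.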

We developed a Snakemake workflow that predicts and annotates bioactive peptides from transcriptome assemblies. Peptigate predicts cleavage peptides, ribosomally synthesized and post-translationally modified peptides (RiPPs), and sORF-encoded peptides. We also provide a reduced pipeline that predicts peptides from protein sequences alone.

Predicting bioactive peptides from transcriptome assemblies with the peptigate workflow

Peptigate predicts bioactive peptides from transcriptomes. It integrates existing tools to predict sORF-encoded peptides, cleavage peptides, and RiPPs, then annotates them for bioactivity and other properties. We welcome feedback on expanding its capabilities.

Both notebooks and workflows have proven to be helpful tools for documenting and executing analyses across the organization. However, for some tasks, we found that we ended up writing similar notebooks multiple times. When we encountered this, we pulled the code out into new software tools to make it more usable and better tested.

Our first software tool is a new R package, sourmashconsumr, which provides a set of functions to analyze and visualize the outputs of the sourmash package. Our goal was to allow scientists to easily work with the outputs of sourmash in R and to provide a set of default plots to more quickly understand the content of sequencing data.

A new R package, sourmashconsumr, for analyzing and visualizing the outputs of sourmash

The sourmash Python package produces many outputs that describe the content and similarity of sequencing data. We developed a new R package, sourmashconsumr, that lets a wider range of users easily load, analyze, and visualize those outputs in R.

Most recently, we developed a Python package called plm-utils (“protein language model utilities”) that contains helper functions for working with protein language models. Our first use case for plm-utils is predicting whether a transcript is coding or non-coding. We show that our tool improves accuracy in this prediction task when applied to short open reading frames (fewer than 300 nucleotides).

Using protein language models to predict coding and non-coding transcripts with plm-utils

We explored the use of embeddings from protein language models to distinguish between genuine and putative coding open reading frames (ORFs). We found that an embeddings-based approach (shared as a small Python package called plm-utils) improves identification of short ORFs.

As we build workflows and software, we sometimes produce databases or other resources that might be helpful beyond our original use cases.

NCBI developed a clustered version of the non-redundant protein database for faster and more diverse taxonomic searches. However, it won’t be available online until fall 2023. To make use of this type of resource now, we created an equivalent database using the same clustering method. We also added taxonomy files that can help with the interpretation of BLAST results.

Clustering the NCBI nr database to reduce database size and enable faster BLAST searches

The increasingly large number of sequences available in public databases makes searches slower and slower. We clustered the NCBI non-redundant protein database and calculated taxonomic info for each cluster. This collapses similar sequences and reduces the database by over half.
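To see how clustering translates into a smaller database, the sketch below summarizes a cluster membership table. It assumes a two-column, tab-separated layout of (representative, member) pairs in which each representative also lists itself, which is the layout MMseqs2's `createtsv` writes; the source does not specify the format of Arcadia's files, and the example IDs are made up. Reducing the database "by over half" corresponds to a `fraction_removed` value greater than 0.5.

```python
import csv
import io


def cluster_summary(tsv_text):
    """Summarize a (representative, member) cluster table.

    Assumes every sequence, including each representative, appears once as a member.
    """
    clusters = {}
    members = set()
    for row in csv.reader(io.StringIO(tsv_text), delimiter="\t"):
        if not row:
            continue  # skip blank lines
        rep, member = row[0], row[1]
        clusters.setdefault(rep, set()).add(member)
        members.add(member)
    if not members:
        raise ValueError("empty cluster table")
    return {
        "sequences": len(members),
        "clusters": len(clusters),  # one representative sequence kept per cluster
        "fraction_removed": 1 - len(clusters) / len(members),
    }


if __name__ == "__main__":
    table = "rep1\trep1\nrep1\tseqA\nrep1\tseqB\nrep2\trep2\nrep2\tseqC\n"
    print(cluster_summary(table))
```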

As we continue using computation to address biological questions, we will keep our focus on making our approach and products useful. As we iterate and find the approaches that work best for our organization, we’ll keep documenting the products we make, how those products are used within and outside of Arcadia, and the thoughts behind our decisions.